The tectonic plates of higher education have shifted.

Walk into any university staff room today, and beneath the usual chatter about grading and schedules, there is a low-level hum of anxiety. It’s the sound of an entire sector realizing that the tools we use to measure intelligence (the essay, the problem set, the code snippet) can now be generated by a machine in seconds, often to a higher standard than our average students can manage in hours.

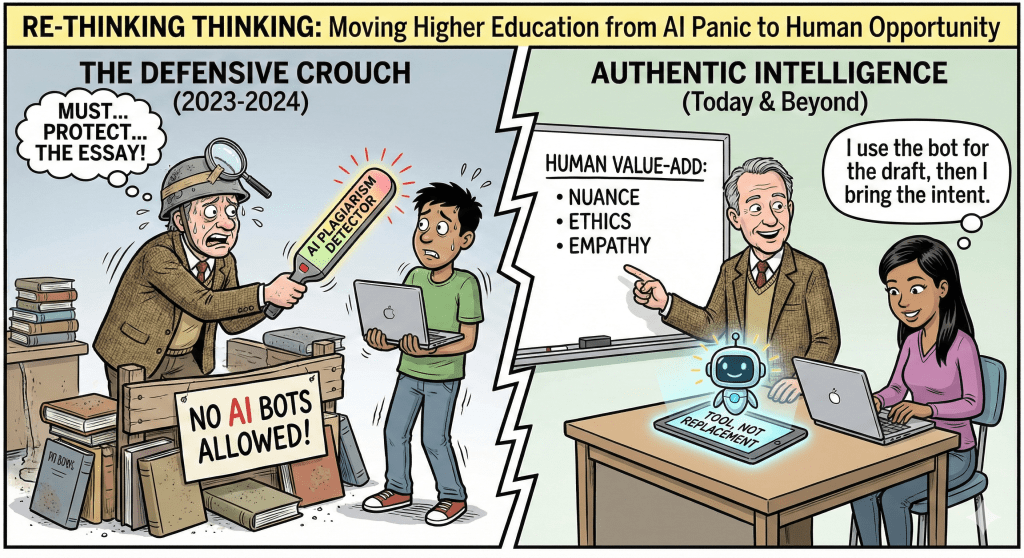

For two years, the collective response of the academy has oscillated between paralyzing fear and frantic reaction. We have banned tools, purchased questionable detection software, and held numerous emergency committee meetings. We have been stuck in a defensive crouch, trying to protect the old ways of doing things against an unstoppable wave of technological change.

It is time to stop asking, “How do we prevent students from using AI?” and start asking the only question that matters: “In a world where machines can generate content instantly, what does it mean to think authentically as a human?”

The way we are talking about AI right now isn’t really about technology. It is about rationalization.

The Mirror in the Machine: What Our Reaction Says About Us

Our debates about AI are rarely as logical as we claim; they are intellectual structures built to hide deep, instinctual emotions. Much of the resistance to these tools stems from an existential fear of obsolescence, but rather than admitting we are terrified of being replaced, we construct noble defenses. We deploy arguments about the human “soul” or use specific ethical flaws as shields, constantly moving the goalposts on creativity to ensure that whatever the machine does doesn’t count as “real” work. We use logic not to find the truth, but to protect our ego and our status.

Conversely, those of us rushing to embrace GenAI are often driven by impulses such as laziness or the Fear Of Missing Out, yet we dress these up as professional necessity. We soothe the cognitive dissonance of cutting corners by insisting the AI is “just a tool” or a “sparring partner,” rationalizing our prompt-writing as genuine creation even when we accept the output wholesale. We lean on fatalism, claiming we have no choice but to adopt the tech or be left behind, which conveniently absolves us of the responsibility to ask whether we should be using it in the first place.

The profound irony is that while AI is trying to mimic human logic, we are busy masking our human emotions with logic. Our arguments about data and copyright are often just surface-level proxies for our deeper psychological needs for certainty and autonomy. This series aims to break that cycle of rationalization. We must stop using logic to hide our fear or our guilt, and instead look clearly at the mirror the machine holds up to us so we can define what true Authentic Intelligence actually looks like.

A New Standard: “Authentic Intelligence”

For too long, the debate has been trapped in a binary: “Pro-AI” (letting machines do everything) versus “Anti-AI” (banning machines entirely). Both approaches are destined to fail. The former leads to atrophy; the latter leads to obsolescence.

We advocate a third way: Authentic Intelligence.

What is it?

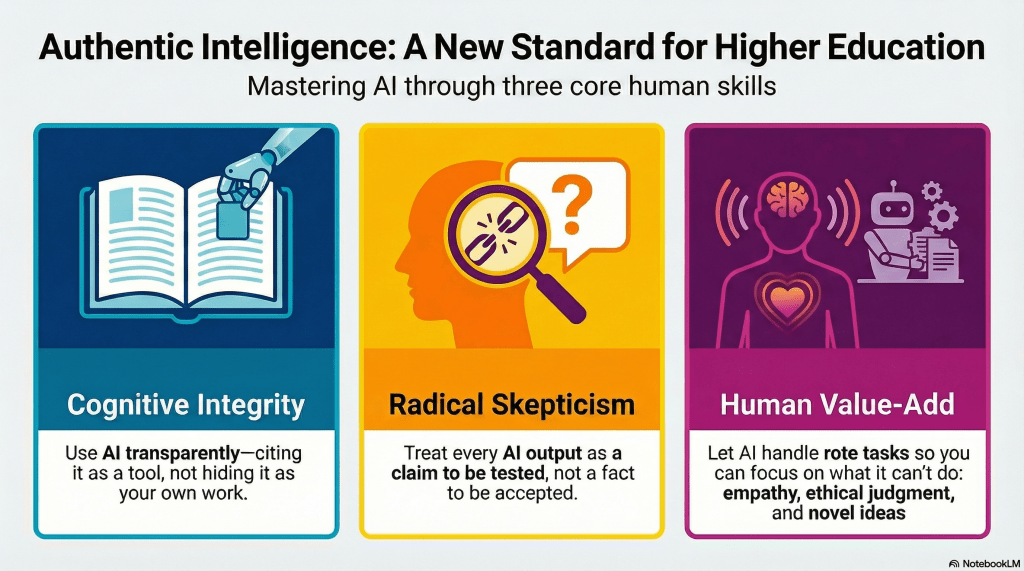

Authentic Intelligence is the active, ethical, and critical management of artificial tools. It is the ability to integrate AI into your workflow to expand your capabilities without surrendering your agency, your voice, or your verification standards.

It is the realization that while AI can generate content, only a human can generate intent. Authentic Intelligence is built on three pillars:

- Cognitive Integrity (Honesty): The refusal to pass off automated processing as human labor. An Authentically Intelligent student or researcher uses AI transparently—citing it as a tool, much like a calculator or a bibliography, rather than hiding it like a secret weapon.

- Radical Skepticism (Critical Thinking): The shift from passive consumption of information to active investigation. It treats every AI output as a claim to be tested, not a fact to be accepted. If you cannot verify it, you do not use it.

- Human Value-Add (Agency): The understanding that AI output is the floor, not the ceiling. Authentic Intelligence means using the machine to handle rote, structural, or repetitive tasks, freeing the human mind to focus on what algorithms cannot do: nuance, empathy, ethical judgment, and novel synthesis.

We are not here to deny the disruption. We are here to master it. Our goal is to move higher education away from fear of replacement and toward confidence in augmentation.

Who This Series Is For

We crafted this series to speak directly to the entire higher education ecosystem, as this challenge requires a unified response.

- To the Lecturers and Faculty: You didn’t get a PhD to become a forensic investigator hunting for AI plagiarism. You want to teach. This series offers concrete classroom strategies to move beyond the “policing” mindset and reignite the joy of mentorship, with a focus on skills that AI cannot replicate.

- To Academic Leaders and Administrators: You are facing existential questions about strategy, budget, and equity. How do you ensure a level playing field when some students can afford a “super-tutor” for €20 a month, while others cannot? We provide frameworks for institutional policies that protect your values and your students.

- To the Students: You are being told that the skills you are going into debt to learn might be automated before you even graduate. We will show you how to use these tools without being replaced by them, and how to become the “future-proof” graduate that employers are desperate for.

- To the General Reader: If you care about the future of truth, creativity, and how the next generation will learn to make sense of the world, this conversation belongs to you, too.

The Roadmap Ahead

Over the next eight posts, we will tackle the most critical friction points between human minds and machine learning, moving from diagnosis to solution.

The era of “business as usual” in education is over. That is terrifying, yes. But it is also a significant opportunity to eliminate busywork and refocus on the university’s core mission: developing minds that can handle complexity with integrity.

Let’s start re-thinking thinking.

Disclaimer: These posts present my perspective on the transformative potential of artificial intelligence (AI) in reshaping educational practices, particularly at the university level, through constructive alignment. They were developed using a multi-stage, AI-assisted process that combined human insights with interactions across various AI systems. This collaborative approach aimed to ensure clarity, coherence, and relevance while significantly reducing the effort required to structure and articulate ideas effectively. The creation process used Gemini to identify relevant references in current academic discourse. The AI-generated outputs underwent manual revisions and refinements. The foundational questions and ideas originated from human-generated content.