There is a new, uncomfortable silence in university hallways.

It happens when a professor reads an essay that is just a little too perfect. The grammar is flawless. The structure is rigid. The vocabulary is impressive but slightly soulless.

The professor thinks, “Did they write this? Or did a machine?”

The student, who perhaps spent hours writing it but writes in a formal style (or is a non-native speaker), thinks: “If I turn this in, will I be accused of cheating?”

In our fourth post on Re-thinking thinking: Authentic Intelligence, we are tackling the Trust Gap. The rise of GenAI has triggered a crisis of suspicion that threatens to break the most critical relationship in education: the bond between the mentor and the learner.

The “Detection” Trap

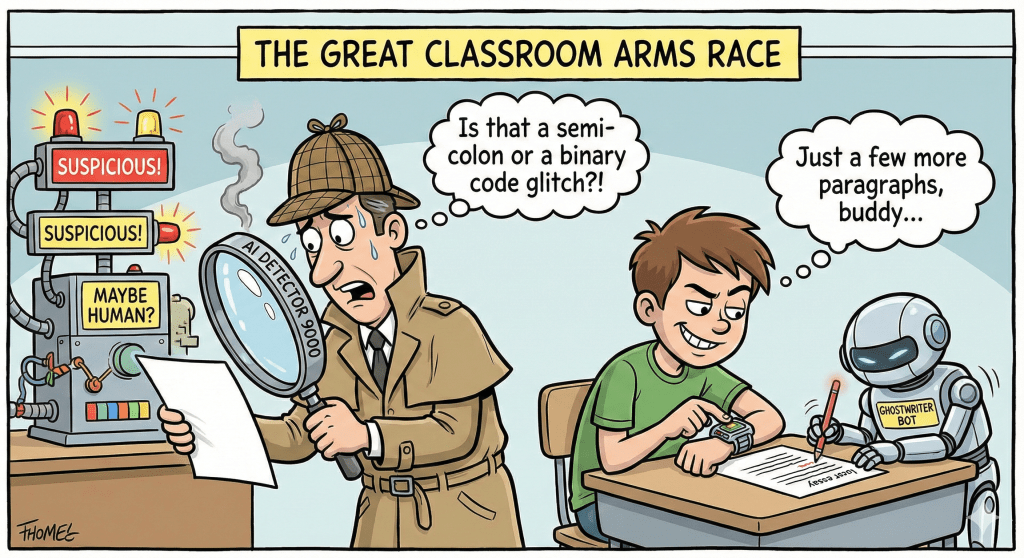

In 2023 and 2024, the immediate reaction of higher education was to fight fire with fire. We turned to AI Detectors, software that purports to tell us whether a text is human or synthetic.

By now, we know this was a mistake.

Research has repeatedly shown that AI detectors are:

- Unreliable: They yield “false positives,” accusing honest students of cheating. This is especially true for non-native English speakers, whose writing often follows patterns that algorithms flag as “artificial.”

- Easily Bypassed: A student can simply ask an AI to “rewrite this to bypass detection,” and the software is often fooled.

We are currently locked in a Plagiarism Arms Race. We build a higher wall. The AI creates a taller ladder. The only result is that teachers are spending more time acting as forensic detectives than as educators, and students are spending more time worrying about proving their work than actually doing it.

The Real Cost: The “Social Contract”

Education relies on a fragile social contract. The teacher agrees to guide and grade fairly; the student agrees to try.

When a teacher has to run every assignment through a “suspicion machine,” that contract breaks.

- If a student feels untrusted, they disengage.

- If a teacher feels duped, they become cynical.

- The classroom becomes a courtroom.

We cannot investigate our way out of this problem. We have to design our way out of it.

The Solution: Radical Transparency

If we can’t stop the tools and can’t reliably detect them, we must regulate how they are used.

The strategic shift for Authentic Intelligence is to move from Prohibition (“Don’t use it”) to Transparency (“Don’t hide it”).

Here is how we rebuild trust:

1. The “Citation & Declaration” Policy

Instead of a blanket ban, introduce a “Methods” requirement for every assignment.

- The Strategy: Students may use AI for specific phases (brainstorming, outlining, or copyediting), provided they include a “Declaration of AI Use” at the end of the paper.

- The Format: “I used ChatGPT to generate ideas for the introduction and to check my citations. The final drafting was done by me. See Appendix A for the prompt history.”

- The Outcome: This removes the fear. Students don’t have to sneak around. If they use it lazily, the professor can mark them down for poor methodology rather than charge them with academic misconduct.

2. Redefining Plagiarism

We need to update our honor codes for the modern age.

- Old Definition: Plagiarism is passing off another person’s words as your own.

- New Definition: Plagiarism is the presentation of automated labor as intellectual labor.

- The Strategy: Be clear that using AI isn’t necessarily “cheating.” AI is a tool. But misrepresenting the source of the work is a lie. We punish the lie, not the tool.

3. The “Oral Defense” (The Ultimate Verifier)

As mentioned in Post 1, the only unhackable AI detector is a conversation.

- The Strategy: A professor who suspects a paper is AI-generated should not rely on a software score. Instead, invite the student for a 5-minute chat and ask them to explain a specific paragraph.

- The Outcome: An honest student will usually be able to explain their thinking. A student who “copy-pasted” usually will not. This restores the human element to the adjudication process.

The Bottom Line

We need to stop trying to catch students and start trying to teach them.

The goal of a university is to prepare students for the real world. In that world, they will likely use AI. Our job is to teach them to use it ethically, transparently, and effectively, not to deny its existence.

Let’s lay down our weapons in the arms race and start talking about integrity again.