Imagine a student named Sarah. She has spent six months working on a groundbreaking Master’s thesis proposing a novel solution to urban water waste. It is brilliant, original, and potentially patentable.

Before submitting it, she wants to clean up the grammar. She copies the entire 50-page document and pastes it into a free AI chatbot with the prompt: “Fix the spelling and make the tone more academic.”

The AI does a great job. Sarah is happy.

But Sarah just made a terrible mistake. She didn’t just get a grammar check; she potentially handed over her intellectual property to a trillion-dollar corporation. She voluntarily fed her original research into the maw of a machine that may use her ideas to train itself to be smarter, without paying her a dime.

In our seventh post on Re-thinking thinking: Authentic Intelligence, we need to talk about Data Sovereignty.

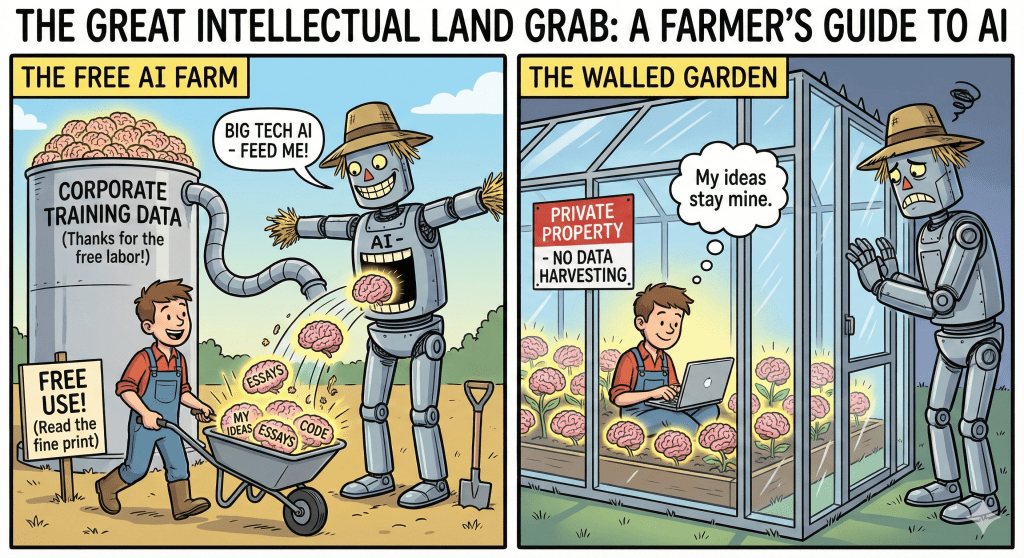

We often say, “If the product is free, you are the product.” In the age of AI, this is no longer accurate. You aren’t just the product; you are the unpaid trainer.

The Hidden Business Model

Why do tech giants give away the most powerful software in human history for free (or for €20)?

It is not out of charity. It is because they have largely exhausted the public internet as a source of training data. They have already scraped Wikipedia, Reddit, and much of the open web. To make the models smarter, they need something more valuable: private, high-quality human reasoning.

They need your emails. They need your code. They need your essays. They need your edits.

Every time a student debates an AI, corrects its mistake, or feeds it a draft, they are generating the kind of human feedback that techniques such as Reinforcement Learning from Human Feedback (RLHF) rely on. They are effectively working a micro-shift at a data center, polishing the algorithm for the next version.

The Risks: From Leaks to Theft

For universities, this presents two massive risks:

- The Privacy Nightmare: In the corporate world, employees have already accidentally leaked trade secrets by asking AI to summarize confidential meeting notes. In a university, what happens when a medical student pastes patient data into a chatbot? Or a psychology student uploads interview transcripts with vulnerable subjects? That data leaves the university’s secure server and enters a “black box” we cannot control.

- The IP “Land Grab”: If a researcher uses a public AI to help write a grant proposal for a new invention, and that data is used to train the model, who owns the invention? The terms of service are often deliberately vague. We risk a future in which the university’s collective brainpower is slowly siphoned into proprietary datasets owned by Silicon Valley.

The Solution: Building “Walled Gardens”

We cannot stop using these tools. They are too helpful. But we must stop using them naively.

Here is the strategy for protecting our intellectual borders:

1. The “Walled Garden” Approach (Enterprise Contracts)

This is the single most important IT investment a university can make.

- The Strategy: Do not permit faculty or researchers to use the free or public versions of ChatGPT or Gemini for sensitive work. Instead, purchase Enterprise instances.

- The Difference: In an Enterprise instance, the contract typically stipulates that none of your data is used for model training. The data stays within the university’s “wall.” It costs money, but the cost of losing your IP is far higher.

2. The “Anonymization” Protocol

We must teach students “Data Hygiene” before they ever open a prompt window.

- The Strategy: Teach the [Bracket] Method. Before pasting text into AI, replace all specific names, locations, and proprietary terms with placeholders like [Client A], [Proprietary Molecule X], or [University Name].

- The Lesson: You can use the AI for reasoning (the logic of the sentence), but never trust it with the specifics (the secrets).
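
For students who want to automate the [Bracket] Method, here is a minimal Python sketch. It is an illustration under stated assumptions, not a complete anonymization tool: the function names `bracket_redact` and `bracket_restore` are hypothetical, the sample names are made up, and it only catches the terms you list yourself. Anything you forget to list still goes to the AI, so a manual read-through remains necessary.

```python
import re


def bracket_redact(text, sensitive_terms):
    """Replace each sensitive term in `text` with its [Placeholder].

    `sensitive_terms` maps a literal term to a placeholder label,
    for example {"Acme Corp": "Client A"}. Matching ignores case.
    """
    if not sensitive_terms:
        return text
    # Try longer terms first so "Acme Corp" wins over a shorter "Acme".
    ordered = sorted(sensitive_terms, key=len, reverse=True)
    # One capture group per term; the lookarounds stop matches inside
    # longer words (a term "Ann" must not fire inside "Annual").
    alternatives = "|".join(f"({re.escape(term)})" for term in ordered)
    pattern = re.compile(rf"(?<!\w)(?:{alternatives})(?!\w)", re.IGNORECASE)

    def to_placeholder(match):
        term = ordered[match.lastindex - 1]  # which group matched
        return f"[{sensitive_terms[term]}]"

    return pattern.sub(to_placeholder, text)


def bracket_restore(text, sensitive_terms):
    """Swap the real terms back in, locally, after the AI has replied."""
    for term, label in sensitive_terms.items():
        text = text.replace(f"[{label}]", term)
    return text


# Hypothetical example data; none of these names come from a real project.
terms = {
    "Acme Corp": "Client A",
    "Zyphorin": "Proprietary Molecule X",
    "Example University": "University Name",
}
draft = "Acme Corp asked Example University to test Zyphorin."
safe_prompt = bracket_redact(draft, terms)
print(safe_prompt)
```

The mapping never leaves your own machine: only `safe_prompt` is pasted into the chatbot, and `bracket_restore` puts the real names back into the AI's answer afterwards (using the spelling stored in `terms`).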

3. The “Opt-Out” Literacy

Most users don’t know they can turn off the data tap.

- The Strategy: Every orientation session should include a 2-minute tutorial on how to navigate the “Settings” menu of major AI tools to turn off “Chat History & Training” or the equivalent option.

- The Lesson: Default settings are designed to benefit the company, not the user. Authentic Intelligence requires taking control of the dashboard.

The Bottom Line

Your ideas are the only currency that matters in the knowledge economy.

When you type them into a free prompt box, you are minting money for someone else. We need to teach our students to value their own thoughts enough to protect them. Use the tool, sharpen the tool, but don’t become the tool.